Telemetry Data Preprocessing for F1 Simulations

Explore the critical preprocessing steps for telemetry data in F1 simulations, enhancing accuracy and driving performance improvements.

In Formula One, raw telemetry data from hundreds of sensors is essential for simulations but unusable without preprocessing. Here's why preprocessing matters and how it's done:

-

Why Preprocess?

- Handle massive data volumes by filtering and focusing on key information.

- Clean sensor noise and errors for reliable results.

- Synchronize multiple data streams for accurate analysis.

-

Key Preprocessing Steps:

- Data Cleaning: Remove outliers, correct sensor drift, and filter noise.

- Time Alignment: Use GPS timestamps and lap markers to synchronize data.

- Standardization: Convert units (e.g., speed, temperature) and normalize formats.

-

Advanced Techniques:

- Machine learning enhances noise reduction and anomaly detection.

- Data quality control ensures accuracy with automated checks and expert reviews.

Telemetry preprocessing transforms raw data into actionable insights, enabling F1 teams to refine strategies and improve performance. The process is critical for accurate simulations and competitive success.

Inside F1 Telemetry: The Invisible Battle for Tenths of a Second

Main Preprocessing Steps

Preparing raw telemetry data for simulations involves several crucial steps. Each step plays a role in ensuring the simulations are accurate and dependable.

Data Cleaning Methods

Once raw telemetry data is collected, it must be refined to eliminate inaccuracies and prepare it for simulation. F1 cars generate vast amounts of telemetry data, which often requires thorough cleaning to be useful.

| Cleaning Method | Purpose | Implementation |

|---|---|---|

| Outlier Detection | Remove irregular sensor readings | Statistical analysis using the 3-sigma rule |

| Sensor Drift Correction | Address sensor drift over time | Rolling average calibration |

| Signal Filtering | Eliminate high-frequency noise | Low-pass Butterworth filters |

These methods help reduce noise while maintaining critical data points. Striking the right balance is essential to ensure important performance metrics remain intact.

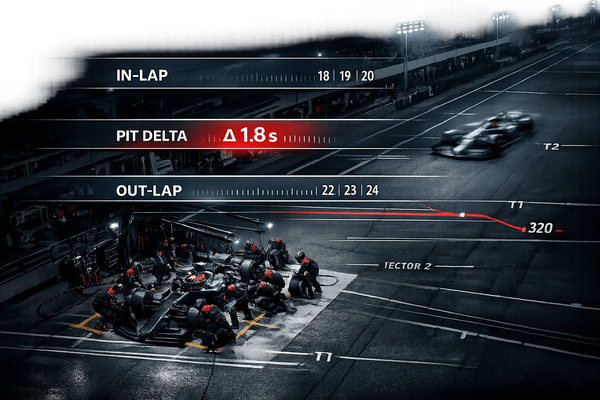

Time Data Alignment

Synchronizing sensor data is another vital step. Teams employ advanced alignment techniques to ensure all data points correspond accurately in time. This process involves:

- Using GPS timestamps to tag each data point and employing cross-correlation to align related signals.

- Adding lap markers to establish clear reference points within the data.

High sampling rates are crucial here, as they allow teams to capture key events, such as gear shifts and braking actions, with exceptional precision.

Data Format Standardization

Standardizing data formats and measurement units is critical for compatibility across simulation tools. Key conversions include:

| Measurement Type | Standard Unit | Conversion Formula |

|---|---|---|

| Speed | MPH | km/h × 0.621371 |

| Temperature | °F | °C × (9/5) + 32 |

| Pressure | PSI | Bar × 14.5038 |

| Distance | Feet | Meters × 3.28084 |

The standardization process ensures consistency by:

- Converting sensor data into uniform units.

- Normalizing sampling rates across datasets.

- Structuring the data into a unified format.

This rigorous standardization minimizes the risk of simulation errors and ensures the data is ready for the next stage: quality control.

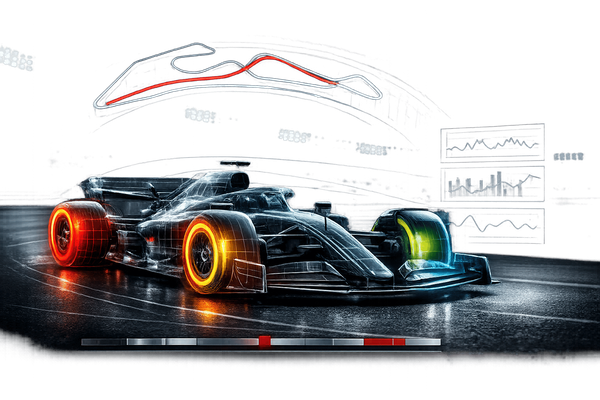

Specialized F1 Data Processing

Simplifying Complex Data

In F1 telemetry simulations, simplifying complex data without losing critical metrics is a must. Techniques like data transformation focus on highlighting the most important features, while principal component analysis helps condense massive datasets into more workable formats. By applying selective downsampling and carefully choosing relevant features, unnecessary parameters are removed, ensuring the essential details remain intact.

These approaches not only make the data more manageable but also pave the way for precise quality checks during simulation formatting.

Data Quality Control

Before telemetry data from an F1 car is ready for simulation, it undergoes strict testing and formatting. Teams rely on both automated systems and expert reviews to ensure the data is accurate and reliable for simulations.

Data Testing Methods

Telemetry data is tested through multiple layers of validation to catch any errors or inconsistencies. Machine learning models, trained specifically on F1 telemetry, play a key role in identifying potential issues. These models help by:

- Spotting statistical anomalies in sensor readings

- Checking consistency across related data channels

- Flagging unusual correlations between parameters

- Ensuring timing synchronization across all data streams

Cross-validation protocols are another essential step. These involve comparing data from different sensors that measure related variables. For instance, acceleration data is cross-checked with GPS readings and wheel speed sensors to confirm accuracy and consistency.

Once the data has passed validation, it’s formatted to meet the needs of simulation systems.

Simulation Data Formatting

Telemetry data is organized into standardized formats, complete with metadata that provides essential context. This metadata includes details such as:

- Circuit characteristics and conditions

- Vehicle setup and configuration

- Trackside environmental data

- Specifics of the test scenario

Key formatting requirements ensure the data is ready for simulation:

1. Temporal Alignment

Every data stream must be precisely time-stamped and synchronized. This applies to:

- Sensor outputs from various vehicle systems

- Track position markers

- Weather updates

- Driver input data

2. Metadata Structure

Each dataset must include detailed contextual information, such as:

- Type of session (practice, qualifying, or race)

- Track conditions, including temperature

- Tire compound and wear levels

- Fuel load and consumption rates

3. Quality Indicators

To ensure transparency and reliability, teams attach quality metrics to the processed data. These metrics include confidence scores, validation statuses, completeness markers, and logs of the processing history.

New Preprocessing Technologies

Advances in data quality control have paved the way for machine learning (ML) to improve simulation accuracy. In Formula One, telemetry data preprocessing has started to rely more on ML to refine data quality. These methods focus on reducing noise and spotting anomalies, ensuring cleaner and more reliable data for simulations.

How ML Improves Data Processing

Machine learning brings several benefits to telemetry data, including:

- Noise Reduction: ML algorithms help filter out unwanted noise, delivering cleaner and more precise signals for simulations.

- Anomaly Detection: AI models actively scan telemetry streams, identifying irregular patterns that could indicate sensor issues or unexpected performance changes.

Conclusion

Telemetry preprocessing plays a crucial role in Formula One simulations, directly influencing race strategies and overall performance. Over time, teams have transitioned from basic data cleaning to highly advanced methods, revolutionizing how real-time data is utilized during races.

By building on foundational techniques, modern preprocessing methods have significantly improved the accuracy of simulations. Managing the sheer volume of telemetry data - both historical and live - requires well-designed pipelines that ensure data integrity and reliability throughout the process.

Looking ahead, advancements in telemetry preprocessing are set to enhance simulation precision even further. The specialized techniques discussed earlier are shaping a framework that empowers teams to make smarter, more strategic decisions. These innovations align seamlessly with the analytical processes examined in previous sections.

From initial data cleaning to sophisticated processing, telemetry preprocessing transforms raw sensor readings into actionable insights that can determine race outcomes. As Formula One technology continues to evolve, the precision and effectiveness of telemetry preprocessing will remain a cornerstone of competitive success.

FAQs

How does machine learning improve noise reduction and anomaly detection in F1 telemetry data preprocessing?

Machine learning plays a critical role in improving noise reduction and spotting anomalies in F1 telemetry data. By analyzing massive datasets, these advanced algorithms can sift through the noise, filtering out irrelevant or faulty signals. This ensures that only accurate, reliable data feeds into simulations, which is essential for fine-tuning performance.

On top of that, machine learning models excel at detecting anomalies - like sudden spikes or unexpected dips in telemetry readings - by comparing real-time data with historical patterns. This capability helps teams identify and address potential problems quickly, safeguarding the quality of the data used for race strategies and performance evaluations.

What challenges arise when synchronizing telemetry data from multiple sensors in F1 cars, and how are they resolved?

Synchronizing telemetry data from various sensors in F1 cars is no easy task. Sensors operate at different speeds, use diverse data formats, and experience transmission delays. This can result in timestamps that don't match up and inconsistencies when trying to merge the data streams.

To tackle these issues, teams rely on advanced algorithms to align and interpolate the data, bringing everything together under a unified time reference. High-speed processors and efficient communication systems also play a crucial role in reducing delays and keeping the data accurate. The result? Reliable telemetry that supports both simulations and split-second decisions during races.

Why is standardizing data formats and measurement units crucial in F1 telemetry preprocessing, and how does it affect simulation accuracy?

Standardizing data formats and measurement units is a crucial step in F1 telemetry preprocessing. It ensures that performance metrics are consistent and reliable, eliminating potential issues like differing time formats or unit mismatches - think kilometers versus miles. Without this consistency, errors can creep into simulations, leading to flawed analyses and misinterpreted results.

Clean, uniform data is the backbone of accurate simulations, which aim to replicate real-world conditions as closely as possible. When inputs are standardized, teams gain a clearer picture of driver performance, can fine-tune car setups more effectively, and craft smarter race strategies. All of this contributes to better outcomes on race day.