How F1 Teams Optimize Telemetry Latency

How F1 teams cut telemetry latency using edge computing, tiered storage, Kafka, optimized networks and error correction for real-time race decisions.

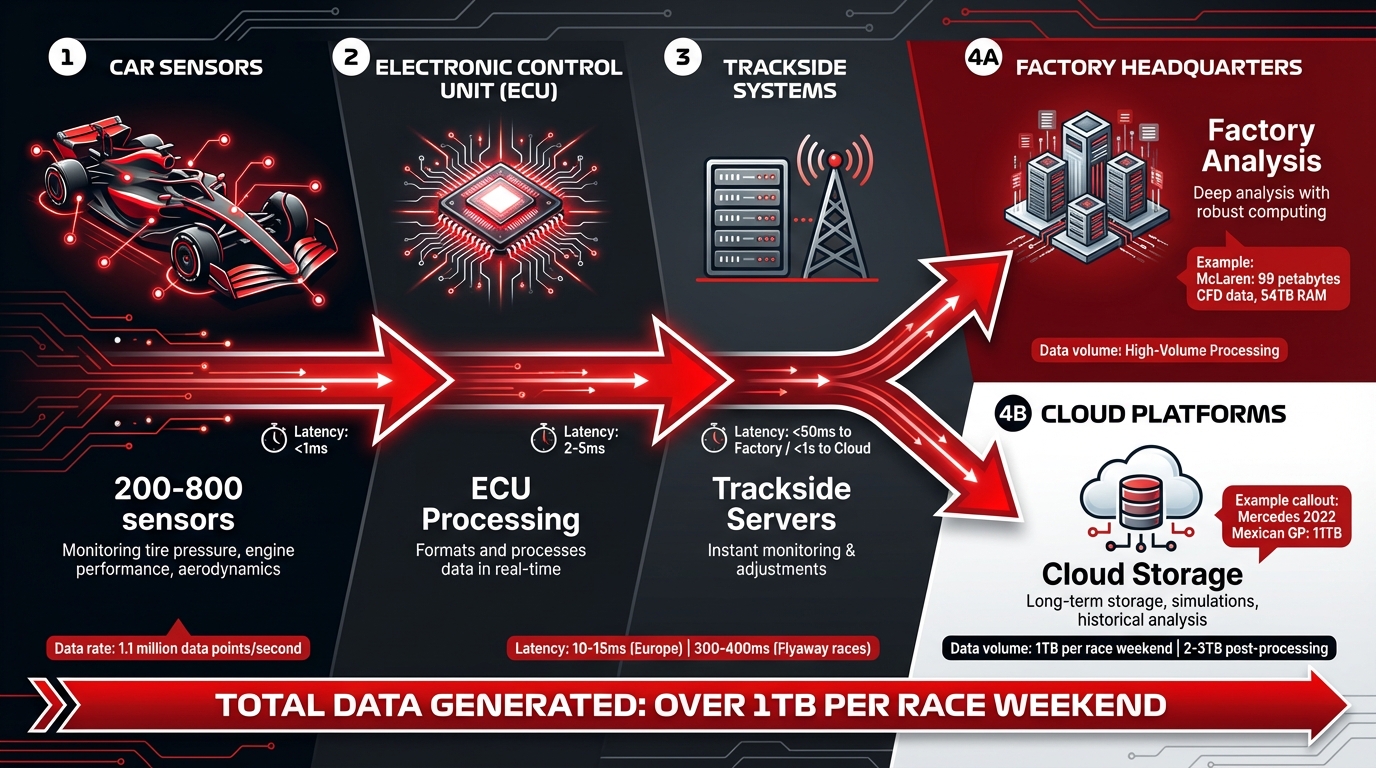

In Formula One, milliseconds matter. Telemetry latency - the time it takes for data from a car to reach engineers - can make or break race strategies. With cars generating over 1.1 million data points every second, teams must process and analyze this information almost instantly to stay competitive. Here's how they do it:

- Data Pipeline: Sensors send data to an Electronic Control Unit (ECU), then to trackside systems, and finally to the factory or cloud for deeper analysis.

- Latency Challenges: European races see latency as low as 10–15 milliseconds, while flyaway races can reach 300–400 milliseconds due to geographic distance.

- Solutions:

  - Tiered Storage: High-speed SSDs at the track for immediate access, factory systems for deeper analysis, and cloud platforms for scalability.

  - Real-Time Processing: Tools like Apache Kafka keep data streams flowing to analysis tools with minimal delay.

  - Network Optimization: High-bandwidth links, data compression, and replication strategies minimize transmission delays.

  - Error Correction: Automated systems detect and fix corrupted data to maintain accuracy.

F1 teams constantly refine these systems to ensure data is delivered fast and accurately, enabling split-second decisions on strategy, pit stops, and car performance.


Understanding the Telemetry Data Pipeline

Figure: F1 Telemetry Data Pipeline: From Car Sensors to Race Strategy

How Telemetry Data Moves Through the System

The telemetry pipeline starts with an impressive array of 200–800 sensors monitoring key aspects like tire pressure, engine performance, and aerodynamics. These sensors sample data thousands of times per second, creating a massive stream of information. The raw readings are first sent to the car's Electronic Control Unit (ECU), which processes and formats the data in real time.

From there, the formatted data is transmitted wirelessly to trackside servers. Engineers at the track receive this data almost immediately, enabling them to monitor performance and make on-the-spot adjustments. The pipeline doesn’t stop at the track, though. The data is also sent to the team’s factory headquarters, where analysts use more powerful computing systems to dive deeper into the information. Many teams additionally store telemetry data on cloud platforms, which support long-term storage, advanced simulations, and historical analysis.

To put this into perspective, a single car generates over 1 terabyte of raw data during a race weekend, and that amount can grow to 2–3 terabytes after post-processing. For instance, during the 2022 Mexican Grand Prix, Mercedes-AMG Petronas collected over 11 terabytes of data. With such a fast and intricate flow of information, there are plenty of opportunities for delays to creep in.

Common Latency Bottlenecks

Delays in the telemetry pipeline can occur at several critical points, and teams work tirelessly to minimize them. The first potential bottleneck lies in the wireless transmission from the car to trackside servers. Signal interference, network congestion, or limited bandwidth can all slow down data delivery. Once the data reaches the trackside systems, disk I/O operations become a major factor. Writing huge amounts of data to storage while simultaneously reading it for real-time analysis can strain resources and introduce further delays.

Another challenge arises during transmission between trackside systems, factory headquarters, and cloud platforms. This issue becomes even more pronounced during flyaway races, where greater distances can increase latency. Additionally, the processes of data serialization and encoding add extra processing time. Teams face the tricky task of balancing fast writes to ensure no data points are missed with fast reads for immediate analysis. Continuous fine-tuning of these systems is crucial to keep everything running smoothly.
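To make the serialization cost mentioned above concrete, here is a minimal sketch comparing a fixed binary layout with JSON for a single sample. The field layout (channel id, microsecond timestamp, 32-bit value) and the function names are assumptions for illustration only; the formats teams actually use are not public.

```python
import json
import struct

# Hypothetical layout for one sample: channel id (uint16),
# timestamp in microseconds (uint64), value (float32).
# "<" means little-endian with no padding.
SAMPLE_FORMAT = "<HQf"
SAMPLE_SIZE = struct.calcsize(SAMPLE_FORMAT)  # 2 + 8 + 4 = 14 bytes


def encode_binary(channel_id: int, timestamp_us: int, value: float) -> bytes:
    """Pack one sample into the fixed 14-byte layout."""
    return struct.pack(SAMPLE_FORMAT, channel_id, timestamp_us, value)


def decode_binary(payload: bytes) -> tuple:
    """Unpack a 14-byte sample into (channel_id, timestamp_us, value)."""
    return struct.unpack(SAMPLE_FORMAT, payload)


def encode_json(channel_id: int, timestamp_us: int, value: float) -> bytes:
    """Encode the same sample as self-describing JSON text."""
    record = {"channel": channel_id, "ts_us": timestamp_us, "value": value}
    return json.dumps(record).encode("utf-8")
```

The binary form is smaller and cheaper to parse, while JSON is easier to inspect and evolve. Which side of that trade-off matters more depends on where in the pipeline the encoding happens.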

Building Low-Latency Storage Systems

Tiered Storage Solutions

Once the telemetry pipeline is streamlined, the next step for F1 teams is creating storage systems designed to minimize latency. To handle data with varying levels of urgency, teams adopt a tiered storage approach. The fastest layer operates directly at the track, where immediate access is non-negotiable. For instance, McLaren Racing brings a portable 140TB SSD data center to every race. These SSDs, housed in shock-mounted cases with Dell servers and Cisco switches, deliver the speed needed to manage live session data seamlessly.

The second tier is based at team factories, where analysts dive deeper into simulations and historical comparisons. McLaren Racing, for example, processes over 99 petabytes of CFD (Computational Fluid Dynamics) data on-premises, using around 54 terabytes of RAM to tackle these compute-heavy tasks. For long-term storage and scalability, teams rely on cloud platforms. Red Bull Racing's CIO, Matt Cadieux, highlighted their transition to Oracle Cloud Infrastructure, saying:

"We were running on an obsolete on-prem cluster, and our models were growing so we needed more compute capacity, and we also needed to do something that was very affordable in the era of cost caps."

This multi-tiered architecture ensures data is stored and accessed in the most efficient way possible.

Optimizing Data Layouts

With a layered storage system in place, F1 teams fine-tune their data layouts to speed up retrieval. For telemetry data, time-series formats are particularly effective since sensors continuously stream measurements. Organizing databases to store sequential readings together allows teams to analyze trends more quickly.
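The idea of storing sequential readings together can be sketched as one time-ordered series per channel, so that a time-window query becomes a binary search plus a contiguous slice. The class and method names below are hypothetical, and a real time-series database adds persistence, compression, and much more.

```python
from bisect import bisect_left, bisect_right


class ChannelSeries:
    """Readings for one sensor channel, kept contiguous and in time order."""

    def __init__(self):
        self.timestamps = []  # microseconds, non-decreasing
        self.values = []      # values[i] was recorded at timestamps[i]

    def append(self, timestamp_us, value):
        # Rejecting out-of-order samples keeps the timestamps sorted,
        # which is what makes binary search valid in window().
        if self.timestamps and timestamp_us < self.timestamps[-1]:
            raise ValueError("samples must arrive in time order")
        self.timestamps.append(timestamp_us)
        self.values.append(value)

    def window(self, start_us, end_us):
        """Return values with start_us <= timestamp <= end_us."""
        lo = bisect_left(self.timestamps, start_us)
        hi = bisect_right(self.timestamps, end_us)
        return self.values[lo:hi]
```

Because neighbouring readings sit next to each other, a trend query over a few seconds touches one small slice rather than scanning every record.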

Aston Martin's 2021 collaboration with NetApp illustrates how this approach can enhance efficiency. Using NetApp's FlexPod solution with SnapMirror technology, they capture snapshots of data by saving only the differences between successive states instead of duplicating entire datasets. This method significantly reduced their trackside equipment, consolidating multiple racks and 10–15 separate devices into just two servers - one for processing and storage, and another for redundancy. By transmitting only incremental changes, they minimize bandwidth usage while maintaining data integrity.
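The principle of sending only what changed between two states can be illustrated with a toy delta over dictionaries. This is not how SnapMirror is implemented (it operates on storage snapshots, not Python objects); the key names and functions here are purely illustrative assumptions.

```python
_MISSING = object()  # sentinel so that a stored None still compares correctly


def snapshot_delta(previous: dict, current: dict) -> dict:
    """Describe how to turn `previous` into `current` without resending everything."""
    changed = {
        key: value
        for key, value in current.items()
        if previous.get(key, _MISSING) != value
    }
    removed = [key for key in previous if key not in current]
    return {"changed": changed, "removed": removed}


def apply_delta(base: dict, delta: dict) -> dict:
    """Rebuild the newer state on the receiving side from the base plus the delta."""
    result = dict(base)
    result.update(delta["changed"])
    for key in delta["removed"]:
        result.pop(key, None)
    return result
```

If only a handful of entries differ between two snapshots, the delta is a small fraction of the full state, which is where the bandwidth saving comes from.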

Balancing Write Speed and Query Performance

Organizing data effectively is just one piece of the puzzle. Systems must also handle the dual challenge of writing and querying massive amounts of high-speed data. Each car streams over a million data points every second, requiring rapid sequential writes while still enabling quick, indexed queries for analysis. Commit logs are often employed to append new data sequentially, boosting write speeds. Meanwhile, indexed queries help teams avoid scanning through terabytes of raw data to find specific insights.
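A minimal sketch of that pairing is an append-only log with a sparse index: every write is a cheap append, and every Nth record is indexed so a read can jump close to the target and scan only a short distance. The `CommitLog` class, the `index_every` parameter, and the in-memory lists are assumptions for illustration; a production log would write to disk segments.

```python
from bisect import bisect_left


class CommitLog:
    """Append-only log of (timestamp_us, payload) with a sparse timestamp index."""

    def __init__(self, index_every=4):
        self.records = []      # appended in non-decreasing timestamp order
        self.index_ts = []     # timestamp of every indexed record
        self.index_pos = []    # position of that record in self.records
        self.index_every = index_every

    def append(self, timestamp_us, payload):
        position = len(self.records)
        if position % self.index_every == 0:
            self.index_ts.append(timestamp_us)
            self.index_pos.append(position)
        self.records.append((timestamp_us, payload))
        return position

    def read_from(self, timestamp_us):
        """Return all records with timestamp >= timestamp_us."""
        # Jump to the last indexed record strictly before the target...
        i = bisect_left(self.index_ts, timestamp_us) - 1
        start = self.index_pos[i] if i >= 0 else 0
        # ...then scan forward at most a few records to the exact start.
        while start < len(self.records) and self.records[start][0] < timestamp_us:
            start += 1
        return self.records[start:]
```

A larger `index_every` keeps the index smaller and writes slightly cheaper, at the price of a longer forward scan on each read.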

In 2022, both Mercedes and Red Bull Racing incorporated Apache Kafka into their real-time data pipelines. This technology enabled them to analyze millions of data points per second almost instantly. Striking this balance between write speed and query performance is essential for the split-second decisions that define F1 success.

Processing Telemetry Data in Real Time

Message Brokers and Telemetry Streams

When it comes to managing the constant stream of telemetry data, F1 teams rely on message brokers to keep things running smoothly. Apache Kafka, for example, plays a key role for teams like Mercedes and Red Bull Racing, efficiently handling millions of data points every second and distributing them to various analytical tools.

The beauty of message brokers lies in their ability to separate data production from data consumption. As sensors on the car continuously send readings, Kafka steps in to collect and buffer this data. This ensures that even if one analysis tool experiences a delay, the overall flow of information remains steady. Teams like Mercedes and Red Bull Racing use this setup to monitor crucial parameters like tire pressure and temperature in real time. They also keep tabs on driver biometrics, including pulse rate and blood oxygen levels, to make informed decisions on the fly.
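The decoupling described above can be illustrated with a toy in-memory topic in which each consumer keeps its own read position. This loosely imitates the retained-log-plus-offsets idea behind Kafka but is not Kafka's API; the `TopicLog` class and the consumer names are hypothetical.

```python
class TopicLog:
    """Toy broker topic: producers append, each consumer reads at its own pace."""

    def __init__(self):
        self._messages = []   # retained, ordered messages
        self._offsets = {}    # consumer name -> index of next unread message

    def publish(self, message):
        """Append a message; the producer never waits for any consumer."""
        self._messages.append(message)
        return len(self._messages) - 1

    def poll(self, consumer, max_messages=100):
        """Return the next batch for this consumer and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._messages[start:start + max_messages]
        self._offsets[consumer] = start + len(batch)
        return batch

    def lag(self, consumer):
        """How many published messages this consumer has not read yet."""
        return len(self._messages) - self._offsets.get(consumer, 0)
```

A slow analysis tool simply accumulates lag and catches up later, while a live dashboard reading the same topic stays current.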

Stream Processing for Live Analysis

Telemetry data isn’t useful straight out of the gate - it needs to be cleaned, validated, and aggregated before it can inform any decisions. F1 teams use stream processing frameworks to handle this transformation in real time. These systems catch errors and calculate derived metrics on the fly, ensuring the data is ready for immediate use. This step is critical, given the sheer volume of information generated by an F1 car. A single corrupted reading could lead to a poor decision, such as an unnecessary pit stop.

Stream processing frameworks continuously filter out anomalies to maintain data accuracy. The refined data then provides engineers with actionable insights - whether it’s adjusting brake cooling, improving fuel efficiency, or fine-tuning energy recovery in the hybrid system. These real-time insights can guide split-second decisions during a race, while also preparing the data for further storage or cloud-based analysis.
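As a rough sketch of the clean, validate, and aggregate steps, the generators below drop missing or out-of-range readings and then compute a rolling mean as a derived metric. The range limits, window size, and function names are assumptions; a real stream processing framework would run comparable logic across many channels in parallel.

```python
from collections import deque


def clean(samples, low, high):
    """Yield only (timestamp, value) pairs whose value is present and in range."""
    for timestamp, value in samples:
        if value is None or not (low <= value <= high):
            continue  # drop missing or physically implausible readings
        yield timestamp, value


def rolling_mean(samples, window):
    """Yield (timestamp, mean of the last `window` accepted values)."""
    recent = deque(maxlen=window)
    for timestamp, value in samples:
        recent.append(value)
        yield timestamp, sum(recent) / len(recent)
```

Chained as `rolling_mean(clean(raw, 0, 200), 2)`, the pipeline processes one sample at a time and never needs the whole stream in memory.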

Trackside vs. Cloud Processing

F1 teams divide their data processing tasks between trackside equipment and cloud infrastructure, each serving a specific purpose. Trackside setups are all about speed, designed for ultra-low latency to support immediate race-day decisions. This is where teams get the instant insights they need for critical strategy calls.

On the other hand, cloud environments come into play for tasks that require more computational power and scalability. These include running simulations, comparing historical data, and conducting in-depth post-race analysis - activities that don’t need real-time responses. This hybrid approach mirrors the tiered storage strategy, balancing the need for quick, trackside decisions with the depth of analysis that cloud platforms provide. By leveraging both systems, teams not only maximize performance but also stay compliant with FIA cost cap regulations.

Optimizing Network and Data Replication

With storage and processing tuned, the next step is designing network and replication strategies that keep latency as low as possible.

High-Bandwidth Links and Network Routing

F1 teams rely on a blend of advanced connectivity technologies to transmit telemetry data from the racetrack to their factories. Their network setups incorporate dedicated fiber optic cables, microwave links, 5G, and satellite systems. This multi-layered approach ensures continuous data flow even if one connection faces disruptions.

A shared telemetry system, used by all teams, guarantees complete racetrack coverage while supporting encrypted data transmissions directly to each team's garage. Once the data reaches the garage, portable data centers - equipped with servers and storage - process it locally before relaying it back to the main factory.

Asynchronous vs. Synchronous Replication

The choice between asynchronous and synchronous replication hinges on the race location and network conditions. For European races, where network latency is minimal, teams often opt for near-synchronous replication. This method ensures that trackside and factory systems remain closely aligned, allowing engineers to work with nearly identical datasets in real time.

For overseas races, where latency can reach up to 300 milliseconds, asynchronous replication becomes the go-to option. This method allows trackside systems to keep processing and sending data without waiting for confirmation from the factory. To further optimize performance, teams use advanced compression and encoding techniques to reduce the size of transmitted data.
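A simple model shows why the choice flips with distance. It assumes the quoted latency figures behave like a round trip to the factory and that a local write costs about 1 ms; both are illustrative assumptions, as are the function names.

```python
def sync_write_latency_ms(local_write_ms, round_trip_ms):
    """Synchronous: the write completes only after the factory acknowledges it."""
    return local_write_ms + round_trip_ms


def async_write_latency_ms(local_write_ms, round_trip_ms):
    """Asynchronous: the write completes locally; replication happens in the background."""
    return local_write_ms


def max_sequential_writes_per_second(latency_ms):
    """Upper bound on back-to-back writes if each one must finish before the next starts."""
    return 1000.0 / latency_ms


# Hypothetical 1 ms local write, 15 ms (European) vs 300 ms (flyaway) round trip.
european_sync = sync_write_latency_ms(1.0, 15.0)    # 16.0 ms per write
flyaway_sync = sync_write_latency_ms(1.0, 300.0)    # 301.0 ms per write
flyaway_async = async_write_latency_ms(1.0, 300.0)  # 1.0 ms per write
```

Under these assumptions a strictly synchronous writer manages about 62 sequential writes per second at a European round and only about 3 at a flyaway race. The asynchronous writer is unaffected by distance, but the factory copy trails the trackside copy.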

Compression and Encoding Methods

F1 teams handle an astonishing volume of telemetry data - about 1.1 million data points per second, translating to over 30 megabytes per lap. While the exact compression and encoding methods are closely guarded secrets, it’s known that all transmitted data is encrypted to ensure security. Teams must carefully balance the computational load of compression against the benefits of transmitting smaller data packets - a critical factor when every millisecond can influence real-time decisions. These finely tuned network and replication strategies play a pivotal role in enabling the split-second decisions that define success in F1.
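The balance between compression cost and transmission savings can be sketched with the standard-library `zlib` module. Since the teams' actual codecs are secret, `zlib`, the synthetic payload, and the link speeds below are stand-in assumptions.

```python
import struct
import zlib


def build_payload(n_samples):
    """Synthetic, slowly varying readings (hypothetical channel id 1)."""
    return b"".join(
        struct.pack("<Hf", 1, 90.0 + (i // 50) * 0.5) for i in range(n_samples)
    )


def compression_ratios(payload, levels=(1, 6, 9)):
    """Map each zlib level to compressed size / original size."""
    ratios = {}
    for level in levels:
        compressed = zlib.compress(payload, level)
        if zlib.decompress(compressed) != payload:
            raise ValueError("round trip failed")
        ratios[level] = len(compressed) / len(payload)
    return ratios


def transmit_ms(n_bytes, link_mbps):
    """Time to push n_bytes over a link of link_mbps megabits per second."""
    return n_bytes * 8 / (link_mbps * 1000)


def worth_compressing(raw_bytes, compressed_bytes, compress_ms, link_mbps):
    """True if compressing and sending beats sending the raw bytes."""
    compressed_total = compress_ms + transmit_ms(compressed_bytes, link_mbps)
    return compressed_total < transmit_ms(raw_bytes, link_mbps)
```

On a slow link with a large payload, compression wins easily. On a fast link with a tiny payload, the CPU time can cost more than the bytes saved.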

Maintaining Data Accuracy at High Speeds

In Formula 1, high-speed telemetry demands flawless data transmission. Just one corrupted packet or a mistimed signal can throw off an entire race strategy.

Error Detection and Correction

To safeguard data integrity, teams rely on automated systems to scan data streams for irregularities, such as anomalies, missing segments, or corrupted packets.

One useful validation tool is the Elevation Chart, which compares fixed track elevation profiles across drivers. Any discrepancies - like horizontal shifts, jitter, or missing sections - indicate potential transmission errors. These detection methods depend heavily on precise timing to ensure accuracy.
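Automated detection of corrupted packets and missing segments is commonly built on checksums and sequence numbers. The sketch below uses CRC-32 from `zlib`; the packet layout (sequence number, payload length, payload, CRC) is a hypothetical format, not a documented F1 protocol.

```python
import struct
import zlib

HEADER = struct.Struct("<IH")  # sequence number (uint32), payload length (uint16)
TRAILER = struct.Struct("<I")  # CRC-32 over header + payload


def frame(seq, payload):
    """Wrap a payload with a header and a trailing checksum."""
    body = HEADER.pack(seq, len(payload)) + payload
    return body + TRAILER.pack(zlib.crc32(body))


def check(packet):
    """Return (seq, payload) if the checksum matches, otherwise None."""
    if len(packet) < HEADER.size + TRAILER.size:
        return None
    body, trailer = packet[:-TRAILER.size], packet[-TRAILER.size:]
    (expected,) = TRAILER.unpack(trailer)
    if zlib.crc32(body) != expected:
        return None  # corrupted in transit
    seq, length = HEADER.unpack(body[:HEADER.size])
    return seq, body[HEADER.size:HEADER.size + length]


def missing_sequences(seen):
    """List sequence numbers absent between the lowest and highest seen."""
    present = set(seen)
    if not present:
        return []
    return [n for n in range(min(present), max(present) + 1) if n not in present]
```

A checksum like this only detects corruption. Recovering the data still requires a retransmission or a redundant copy.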

Time Synchronization Across Systems

Telemetry data is captured thousands of times per second, making precise time synchronization absolutely critical. To prevent misalignments that could lead to incorrect event correlations, teams use GPS clocks and Precision Time Protocol (PTP) systems.
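PTP estimates a clock's offset from four timestamps exchanged between a master and a slave clock. The sketch below applies the standard delay request-response arithmetic, which assumes the network delay is the same in both directions; the example numbers are invented.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """
    t1: master sends Sync           (master clock)
    t2: slave receives Sync         (slave clock)
    t3: slave sends Delay_Req       (slave clock)
    t4: master receives Delay_Req   (master clock)
    Returns (slave offset from master, one-way path delay), same units as inputs.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2
    delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset, delay


# Invented example in microseconds: slave runs 5 us ahead, path delay is 20 us.
offset_us, delay_us = ptp_offset_and_delay(1000, 1025, 1100, 1115)
```

Here the result is an offset of 5 µs and a delay of 20 µs. If the path is asymmetric, the difference between the two directions appears as an error in the offset estimate.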

Monitoring and Debugging Latency Issues

Equally important is the ongoing assessment of system performance. Tools like Catapult's RaceWatch allow teams to visualize telemetry data in real time using embedded timestamps, which helps pinpoint latency bottlenecks.

Telemetry also serves as a powerful forensic tool. Chris Rothe, Co-founder of Red Canary, emphasizes its importance:

"Without the telemetry, we can't know what led to the alert condition. Did the temperature spike rapidly or was it a slow build-up? This context is critical to determining the right response."

This capability allows teams to "rewind the clock" after an issue arises, uncovering not just what went wrong but why it happened. These insights help refine systems for the future, ensuring continuous improvement.

Together, these strategies ensure that fast data delivery remains accurate, reinforcing earlier efforts to maintain low latency without compromising reliability.

Steps for Testing and Improving Latency

Reducing telemetry latency is all about measuring, identifying issues, and making targeted adjustments. F1 teams rely on a systematic approach to keep their data pipelines running as efficiently as possible.

Measuring Baseline Latencies

Before making any tweaks, teams need to know where they stand. Engineers measure how long it takes for telemetry data to travel from sensors to the display screen. These baseline measurements can vary depending on the track. For instance, at European circuits, latency often hovers between 10–15 milliseconds, while more remote locations can see delays of up to 300 milliseconds.

To confirm these baselines, teams use tools like the Elevation Chart, which helps spot misalignments or jitter by comparing data against the fixed elevation profile of the track.
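A baseline is more useful as a distribution than as a single number. The sketch below summarises sensor-to-display latency from paired timestamps using nearest-rank percentiles. It assumes both timestamps come from synchronized clocks, and the function names are hypothetical.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def latency_summary(samples):
    """samples: iterable of (sent_ms, displayed_ms) pairs for individual readings."""
    latencies = sorted(displayed - sent for sent, displayed in samples)
    return {
        "min": latencies[0],
        "p50": percentile(latencies, 50),
        "p95": percentile(latencies, 95),
        "max": latencies[-1],
    }
```

Tracking the 95th percentile alongside the median helps separate a consistently slow link from one that is usually fast but suffers occasional spikes.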

Finding and Fixing Bottlenecks

After establishing baseline latencies, the next step is identifying where delays are happening. Engineers dig into telemetry streams and visually inspect the system to locate issues like slow storage write speeds, network congestion, or processing delays. When bottlenecks are found, teams implement specific fixes - this could mean upgrading hardware, tweaking software, or optimizing network routes. It’s all about addressing the weak links to improve overall performance.
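One way to locate the weak link is to timestamp a reading at each hop and compare the gaps. The stage names and timings in this sketch are hypothetical.

```python
def stage_breakdown(stamps):
    """
    stamps: ordered list of (stage_name, timestamp_ms) for one reading.
    Returns (per-hop durations, slowest hop).
    """
    durations = [
        (f"{a}->{b}", tb - ta)
        for (a, ta), (b, tb) in zip(stamps, stamps[1:])
    ]
    worst = max(durations, key=lambda hop: hop[1])
    return durations, worst


# Hypothetical trace of one reading through the pipeline.
trace = [
    ("car", 0.0),
    ("trackside", 4.0),
    ("disk", 9.0),
    ("factory", 21.0),
    ("display", 23.0),
]
hops, slowest = stage_breakdown(trace)
```

In this invented trace the disk-to-factory hop accounts for 12 of the 23 milliseconds, so that is where a fix would pay off first.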

Continuous Optimization

In the fast-paced world of F1, reducing latency isn’t a one-time task; it’s an ongoing effort. Teams refine their systems throughout the season and even during the off-season. For example, the BWT Alpine F1 Team processes up to 50 billion data points every week across manufacturing, testing, and race operations. This massive dataset helps them identify small but meaningful changes that add up over time.

Cloud computing has been a game-changer in this process. Computational Fluid Dynamics (CFD) simulations that used to take 60 hours can now be completed in just 12 hours by leveraging AWS's high-performance computing instances. This quicker turnaround enables teams to test more configurations, creating a feedback loop that drives continuous improvement.

Conclusion: The Advantage of Optimized Telemetry

In Formula One, every fraction of a second counts - quicker telemetry systems enable teams to make split-second decisions that could determine the outcome of a race. Mastering telemetry latency allows teams to perfectly time pit stops during safety car periods or tweak energy recovery strategies in real time. The contrast between a 10-millisecond latency at European circuits and a 300-millisecond delay at more remote locations can be the difference between seizing a fleeting opportunity or letting it slip away.

The same drive for speed extends to car development. Cloud-based high-performance computing cuts CFD simulation times from 60 hours to just 12, significantly speeding up the development process. This not only accelerates the creation of new components but also enhances race-day tactics. Engineers can evaluate more configurations, validate models faster, and roll out performance upgrades ahead of their competitors.

Real-time telemetry fuels the strategic insight that sets winning teams apart. For example, at the 2022 Dutch Grand Prix, the BWT Alpine F1 Team used real-time radio transcription to execute a perfectly timed pit stop for Fernando Alonso, securing eight valuable championship points.

As discussed, these technological advancements are the foundation of race-winning strategies. Investments in tiered storage systems and real-time data processing enable faster decision-making, smarter strategies, and quicker car development. Together, these innovations highlight how optimizing telemetry is key to achieving success both on the track and behind the scenes.

F1 Briefing dives deep into the technical innovations shaping modern Formula One, unraveling the complex systems that give teams their competitive edge.

FAQs

How do F1 teams minimize data latency during long-distance races?

F1 teams encounter major latency hurdles during flyaway races because of the physical distance between the car’s sensors, trackside engineers, and those stationed back at headquarters. To overcome this, they rely on on-car edge computing to handle telemetry data efficiently. This system compresses and pre-processes the data directly on the car, filtering out less critical information while giving priority to key metrics like engine temperature, tire wear, and driver biometrics.

The processed data is then sent via high-frequency microwave links to local servers located in the pit lane. These servers use low-latency streaming platforms to ensure rapid processing. In the garage, portable "edge data centers" take it a step further by running advanced analytics, including lap-time predictions and tire degradation assessments. From there, only the most essential insights are transmitted to the team’s headquarters. This combination of edge computing, real-time data sharing, and distributed processing ensures near-instantaneous data flow, enabling engineers to make crucial decisions in real time, even when operating thousands of miles away.
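The prioritization step can be sketched as a priority queue drained against a per-cycle byte budget. The channel priorities, the budget, and the `cabin_humidity` channel are illustrative assumptions, not a documented scheme.

```python
import heapq

# Lower number = more urgent. Unknown channels fall back to priority 9.
PRIORITY = {
    "engine_temp": 0,
    "tyre_wear": 0,
    "driver_biometrics": 1,
    "cabin_humidity": 5,  # hypothetical low-priority channel
}


def select_for_uplink(samples, budget_bytes):
    """Pick samples in priority order until the byte budget is used up."""
    # The enumerate index breaks ties so the dicts themselves are never compared.
    heap = [(PRIORITY.get(s["channel"], 9), i, s) for i, s in enumerate(samples)]
    heapq.heapify(heap)
    chosen, used = [], 0
    while heap:
        _, _, sample = heapq.heappop(heap)
        if used + sample["size"] > budget_bytes:
            continue  # does not fit; a smaller, later sample still might
        chosen.append(sample)
        used += sample["size"]
    return chosen
```

Samples left out of one cycle could be queued for the next or kept only in local storage, depending on how stale they are allowed to become.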

How do F1 teams use Apache Kafka to process telemetry data?

Apache Kafka helps teams handle the heavy flow of telemetry data generated by Formula 1 cars during a race. Each car produces over a million data points every second, covering metrics like speed, tire temperature, and aerodynamic loads. Kafka preserves message order within each partition, buffers the stream, and scales across brokers to meet race-day demand. Engineers rely on this real-time stream to make split-second decisions while also preserving the data for detailed analysis after the race.

What makes Kafka even more powerful is its seamless integration with stream-processing tools. These tools help teams cut through the noise, add meaningful context to the data, and trigger alerts almost instantaneously. The refined data fuels live monitoring dashboards and gets stored for in-depth analysis later. This setup allows teams to transform raw telemetry into actionable strategies in a matter of milliseconds.

How do F1 teams ensure accurate telemetry data during high-speed transmissions?

F1 teams rely on highly sophisticated systems to ensure telemetry data remains precise, even when cars are hurtling down the track at over 186 mph. To achieve this, onboard systems use error-detecting codes and duplicate key parameters, enabling them to instantly identify and correct any corrupted data. Data transmission is backed by multiple redundant channels, such as high-speed radio and fiber links, so if one pathway fails, another seamlessly takes over. Additionally, timestamps synchronized with the car’s master clock help pinpoint and resolve issues like out-of-order or missing data.

Once the telemetry data reaches the team, it undergoes rigorous validation through real-time processing. Engineers cross-check the incoming data against physical models and simulations, flagging any irregularities. This layered system of checks ensures that the information teams rely on is both accurate and dependable. With this level of precision, engineers and strategists can make split-second decisions confidently, knowing the data they have is reliable and ready for action.
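Duplicating key parameters lets a receiver recover from a single bad copy by majority vote. This is a minimal sketch assuming each parameter is sent an odd number of times; the function name and the three-copy example are hypothetical.

```python
from collections import Counter


def vote(copies):
    """Return the value held by a strict majority of copies, or None if there is none."""
    if not copies:
        return None
    value, count = Counter(copies).most_common(1)[0]
    return value if count > len(copies) / 2 else None
```

With three copies, one corrupted value is outvoted by the other two. If all three disagree, the function returns `None` so the reading can be flagged rather than trusted.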