Reinforcement Learning in F1 Strategy

Reinforcement learning is reshaping Formula 1 strategies, enabling teams to make data-driven decisions in real time for optimized performance.

Formula 1 teams are transforming race-day strategies with reinforcement learning (RL). This machine learning method enables systems to learn and improve decisions - like pit stops and tire choices - based on real-time race data. Unlike traditional simulations or human intuition, RL dynamically processes variables like weather, tire wear, and competitor actions, offering faster and more precise recommendations.

Key points:

- RL systems analyze live telemetry to optimize tire management and pit timing.

- They adapt to unpredictable events like weather changes or safety cars.

- RL models, such as RSRL, have shown measurable performance improvements in simulations.

- Challenges include data quality issues, system transparency, and adapting to diverse tracks.

Looking ahead, RL could shape energy recovery strategies, fuel optimization, and even driver training. Collaboration between teams and researchers is advancing RL's potential while addressing current limitations.

How Data and Generative AI is used in Formula 1 (w/ Bernie Collins)

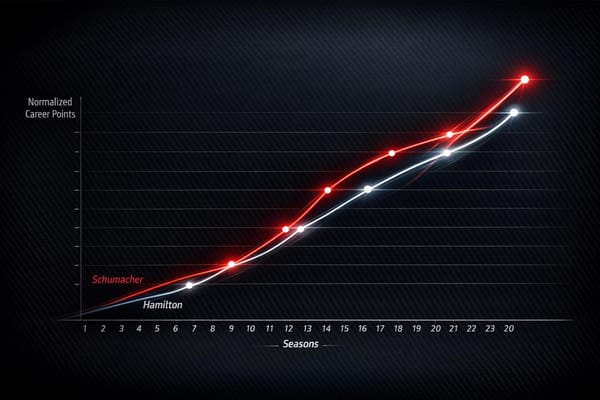

How F1 Strategy Optimization Has Changed

The way Formula 1 teams approach strategy has undergone a dramatic transformation over the years. What began as a reliance on intuition and experience has evolved into a highly sophisticated, data-driven process. Today, teams juggle thousands of variables in real time, using advanced computational tools to make decisions that were once based on gut instinct. Let’s dive into how this shift unfolded and the key technologies driving it.

Transitioning from Instinct to Data

For much of F1’s history, race strategies were shaped by seasoned experts who relied on experience and well-practiced rules of thumb. Decisions about pit stops, tire selections, and responses to race-day surprises were made based on years of accumulated knowledge. While this approach had its merits, it often fell short in the face of the sport’s increasing complexity. Real-time factors like tire degradation, sudden weather changes, and fuel consumption presented challenges that were simply too vast for human strategists to process effectively during a race.

The game-changer came with the advent of telemetry. As F1 cars began generating enormous amounts of real-time data, it became clear that human analysis alone couldn’t keep up. This influx of information paved the way for computational tools to take center stage in strategy development.

Monte Carlo Simulations and Machine Learning

The adoption of Monte Carlo simulations marked a pivotal step forward in strategy optimization. These simulations allowed teams to model thousands of potential race scenarios, factoring in variables like safety car probabilities, tire wear, and weather shifts. By quantifying risks and rewards, teams could make more informed decisions. However, the downside was the high computational demand, which limited the ability to adjust strategies during races.
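To make the Monte Carlo idea concrete, here is a minimal Python sketch that compares three candidate pit laps for a one-stop race. Every number in it (lap time, degradation rate, pit loss, safety car probability) is an illustrative assumption rather than real team data, and the race model is deliberately simple.

```python
import random

def simulate_race(pit_lap, total_laps=50, rng=None):
    """Return total race time in seconds for a one-stop strategy (toy model)."""
    rng = rng or random.Random()
    base_lap = 90.0         # assumed lap time on fresh tires, seconds
    deg_per_lap = 0.08      # assumed time lost per lap of tire age
    pit_loss = 22.0         # assumed time lost for a normal pit stop
    sc_pit_loss = 12.0      # assumed reduced loss when stopping under a safety car
    sc_prob_per_lap = 0.02  # assumed chance of a safety car on any given lap

    total, tire_age = 0.0, 0
    for lap in range(1, total_laps + 1):
        # In this toy model the safety car only changes the cost of a stop.
        safety_car = rng.random() < sc_prob_per_lap
        total += base_lap + deg_per_lap * tire_age + rng.gauss(0.0, 0.2)
        tire_age += 1
        if lap == pit_lap:
            total += sc_pit_loss if safety_car else pit_loss
            tire_age = 0
    return total

def evaluate_pit_laps(candidates, runs=2000, seed=0):
    """Average race time per candidate pit lap over many randomized races."""
    rng = random.Random(seed)
    return {
        lap: sum(simulate_race(lap, rng=rng) for _ in range(runs)) / runs
        for lap in candidates
    }

results = evaluate_pit_laps([10, 25, 40])
best_lap = min(results, key=results.get)
print(best_lap, {lap: round(t, 1) for lap, t in results.items()})
```

Each candidate needs thousands of randomized races before its average is trustworthy, which is where the computational cost comes from once the number of candidate strategies and variables grows.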

As computing power improved, machine learning entered the scene, offering a new level of precision. By analyzing vast amounts of historical data, machine learning algorithms could identify patterns and make predictions about lap times, race outcomes, and even competitor behavior. This approach allowed teams to anticipate and respond to race developments with greater accuracy. Unlike Monte Carlo simulations, machine learning brought a level of flexibility that made it more effective in the ever-changing conditions of F1 racing.
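As a small illustration of learning a pattern from historical data, the sketch below fits a straight line to lap time versus tire age and uses it to predict a later lap. The lap times are made-up illustrative values, and a least-squares line is only a stand-in for the richer models a team would use.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Illustrative (invented) stint data: tire age in laps, lap time in seconds.
tire_age = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
lap_time = [90.15, 90.17, 90.32, 90.36, 90.51, 90.63, 90.68, 90.80, 90.89, 91.02]

slope, intercept = fit_line(tire_age, lap_time)
predicted_lap_15 = intercept + slope * 15  # extrapolated lap time at tire age 15

print(f"degradation: {slope:.3f} s/lap, predicted lap 15: {predicted_lap_15:.2f} s")
```

The fitted slope is an estimate of degradation per lap, which is the kind of quantity a strategy model can then feed into pit stop decisions.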

Reinforcement Learning Enters F1

Reinforcement learning (RL) has emerged as the latest frontier in F1 strategy optimization. Unlike earlier methods, RL thrives in dynamic environments, making it particularly suited to the unpredictable nature of racing. RL models, such as Deep Q-Networks (DQN) and Deep Recurrent Q-Networks (DRQN), learn to estimate the long-term value of each available action, such as pitting now or staying out, from the current race state. Because those estimates are recomputed as the race state changes, teams can adapt their strategies as conditions change.

For instance, a DRQN-based strategy predictor tested on F1 race data from 2021 to mid-2024 demonstrated its ability to recommend tire compounds and pit stop timings that closely mirrored professional team decisions. By continuously learning and adjusting to variables like track conditions, weather, and competitor movements, RL has proven capable of outperforming static, pre-planned strategies. This adaptability highlights RL’s growing role in handling the unpredictable nature of F1.
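DQN and DRQN use neural networks to approximate action values, but the update they build on is ordinary Q-learning. The sketch below applies that update with a plain lookup table to a toy 12-lap race where the only choice each lap is to stay out or pit. It is not the DRQN predictor described above; the race length, degradation rate, pit loss, and hyperparameters are all assumptions chosen to keep the example small.

```python
import random
from collections import defaultdict

TOTAL_LAPS = 12    # assumed toy race length
DEG_PER_LAP = 1.0  # assumed seconds lost per lap of tire age
PIT_LOSS = 10.0    # assumed seconds lost per pit stop
STAY, PIT = 0, 1

def step(state, action):
    """Advance one lap; return (next_state, reward, done)."""
    lap, tire_age = state
    if action == PIT:
        cost, tire_age = PIT_LOSS, 0   # pay for the stop, run the lap on fresh tires
    else:
        cost = DEG_PER_LAP * tire_age  # pay for worn tires
    next_state = (lap + 1, tire_age + 1)
    return next_state, -cost, next_state[0] == TOTAL_LAPS

def train(episodes=5000, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # q[(state, action)], unseen pairs default to 0.0
    for _ in range(episodes):
        state, done = (0, 0), False
        while not done:
            if rng.random() < epsilon:  # explore with a random action
                action = rng.choice((STAY, PIT))
            else:                       # otherwise act greedily on current estimates
                action = max((STAY, PIT), key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in (STAY, PIT))
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a')
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

def rollout(policy):
    """Total time lost over one race when following the given policy."""
    state, done, total = (0, 0), False, 0.0
    while not done:
        state, reward, done = step(state, policy(state))
        total -= reward
    return total

q = train()
learned_cost = rollout(lambda s: max((STAY, PIT), key=lambda a: q[(s, a)]))
never_pit_cost = rollout(lambda s: STAY)
print(learned_cost, never_pit_cost)
```

A deep variant swaps the table for a network that maps race features to action values, and a recurrent variant adds memory of earlier laps, which matters when the current lap's data alone does not describe the full race state.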

| Approach | Adaptability | Computational Cost | Real-Time Use | Interpretability |
|---|---|---|---|---|
| Human Expert | Low | Low | High | High |
| Monte Carlo Simulation | Medium | High | Low | Medium |
| Machine Learning | High | Medium | Medium | Medium |
| Reinforcement Learning | Very High | Medium | Very High | Improving (with XAI) |

The evolution from instinct-driven decisions to data-powered strategies has reshaped F1 in remarkable ways. Reinforcement learning, in particular, is pushing the boundaries, offering a glimpse into a future where strategy optimization reaches new heights of precision and adaptability.

How F1 Teams Use Reinforcement Learning

Reinforcement learning (RL) is making waves in Formula 1, especially when it comes to in-race decision-making. From fine-tuning tire strategies to adapting on the fly during unpredictable events, RL is reshaping how teams approach the complexities of race day. Its impact is particularly evident in areas like tire management and real-time strategy adjustments, where its ability to process vast amounts of data and make split-second recommendations is proving invaluable.

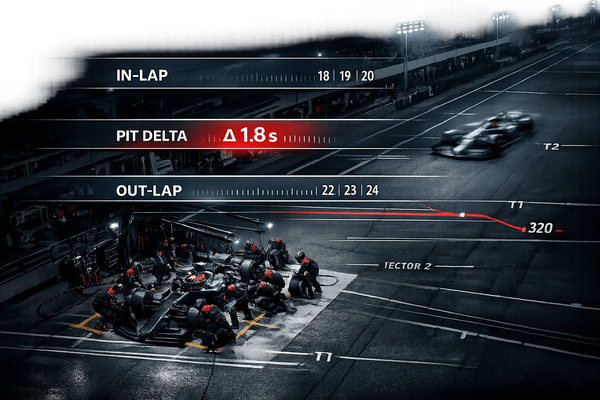

Tire Management and Pit Stop Timing

Tire strategy is one of the cornerstones of success in F1. RL models are now taking on this challenge by analyzing live telemetry data - such as tire wear, track temperatures, and gaps to competitors - to determine the best moments to pit.

For example, a Deep Recurrent Q-Network (DRQN)-based model processes critical data points like tire wear trends, lap time degradation, and fuel levels. This enables teams to make informed decisions about both pit stop timing and tire compound selection. Unlike traditional Monte Carlo simulations, these RL models excel at balancing immediate performance with long-term race strategy, offering a more dynamic approach.
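The balance between immediate performance and long-term strategy is usually expressed through a discount factor, which sets how much future laps count relative to the current one. The sketch below compares pitting now with staying out on 20-lap-old tires under two discount factors. The degradation rate, pit loss, and horizon are assumed values for illustration only.

```python
def discounted_return(costs, gamma):
    """Sum of negative lap costs, each discounted by gamma per lap into the future."""
    total = 0.0
    for cost in reversed(costs):
        total = -cost + gamma * total
    return total

def lap_costs(start_age, laps, pit_first=False, deg=0.1, pit_loss=22.0):
    """Time lost on each of the next `laps` laps (assumed linear degradation)."""
    costs, age = [], start_age
    for lap in range(laps):
        if pit_first and lap == 0:
            costs.append(pit_loss)  # stop now, then run this lap on fresh tires
            age = 0
        else:
            costs.append(deg * age)
        age += 1
    return costs

stay = lap_costs(start_age=20, laps=20)
pit = lap_costs(start_age=20, laps=20, pit_first=True)

for gamma in (0.99, 0.8):
    better = "pit now" if discounted_return(pit, gamma) > discounted_return(stay, gamma) else "stay out"
    print(f"gamma={gamma}: {better}")
```

With a discount factor near 1 the agent accepts the pit loss because fresher tires pay it back over the following laps. With a low discount factor the same agent stays out, because it barely counts those later laps.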

Interestingly, the decisions suggested by these RL models often align with the instincts of seasoned strategists. Even better, they occasionally uncover strategic opportunities that might otherwise go unnoticed.

Real-Time Strategy Changes

RL goes beyond just pit stop timing - it’s also a powerful tool for responding to unexpected developments during a race. Whether it’s a safety car deployment, sudden weather changes, or a competitor’s retirement, RL systems can update their calculations in real time. Lap by lap, they reassess the race state, providing recommendations on whether to pit immediately, delay a planned stop, or switch tire compounds. The speed of these calculations far surpasses what human strategists can achieve manually.

This adaptability shines in mixed weather conditions, a notoriously tricky scenario for traditional models. For instance, when rain begins to fall, grip levels can change rapidly, making it difficult to decide on the optimal tire choice. RL systems, however, can process weather updates, track conditions, and competitor behaviors simultaneously, delivering strategy recommendations that keep teams ahead of the curve.

Case Study: RL Success in Recent Applications

One standout example of RL’s potential is the Race Strategy Reinforcement Learning (RSRL) model, developed by Devin Thomas and colleagues. In a simulated run of the 2023 Bahrain Grand Prix, the RSRL model managed cars expected to finish at an average position of P5.5 and improved their results to P5.33. While this improvement may seem minor, in F1’s fiercely competitive environment, even small gains can be the difference between scoring points and leaving empty-handed.
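To put the P5.5 to P5.33 result in perspective, the arithmetic below works out the size of the gain. Only the two average positions come from the reported simulation; the framing as "races per place gained" is just one illustrative way to read an average.

```python
expected_avg_position = 5.5   # baseline average finishing position
achieved_avg_position = 5.33  # average finishing position with RSRL

gain = expected_avg_position - achieved_avg_position  # positions gained on average
relative_gain = gain / expected_avg_position          # gain as a share of the baseline
races_per_place = 1 / gain                            # races needed to average one full place

print(f"{gain:.2f} positions, {relative_gain:.1%}, one place every {races_per_place:.1f} races")
```

An average gain of 0.17 positions is roughly equivalent to finishing one place higher in about one race out of six.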

What sets the RSRL model apart is its transparency. By using feature importance analysis and decision tree surrogates, it explains its strategic choices, addressing concerns about automation. The model also demonstrated impressive consistency across various tracks and conditions, performing well in scenarios spanning from 2021 to mid-2024. Its recommendations closely mirrored the strategies seen in professional races, reinforcing RL’s value as a decision-support tool.

Encouraged by these successes, F1 teams are increasingly collaborating with academic researchers to refine RL models, aiming to make them even more reliable and versatile for future race strategies.

Problems with RL in F1 Strategy

Reinforcement learning (RL) holds potential for shaping Formula 1 (F1) strategy, but it’s far from a perfect solution. The fast-paced, high-stakes world of F1 racing introduces challenges that make RL integration anything but straightforward. From data limitations to the unpredictable nature of races, teams face numerous hurdles when trying to combine RL with existing human and computer-driven strategies.

Data Quality and Access Issues

For RL models to succeed, they need access to detailed and reliable race data. Unfortunately, F1 teams often deal with fragmented data due to restricted access, sensor malfunctions, or incomplete records.

For instance, during live races, sensor failures can leave teams scrambling to make decisions based on human judgment alone. On top of that, privacy concerns and the competitive nature of the sport mean teams are often reluctant to share data, making it harder to build robust datasets that could improve RL models.

Understanding RL Decisions

Another major hurdle is the "black box" nature of RL systems - their recommendations can be difficult to interpret. In one simulation, justifying an algorithm's pit stop and tire choices required additional tools to explain its reasoning to team strategists. To address this, methods like feature importance analysis, decision tree–based surrogate models, and counterfactual explanations have been developed to make RL decisions more transparent.

However, these explainability tools add extra computational demands, and teams remain cautious about relying on RL systems without clear evidence of their reliability. For now, teams prefer to use RL as a supportive tool rather than as the sole decision-maker, especially when the stakes are high.
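Of the explanation methods named above, feature importance is the simplest to sketch. The example below uses permutation importance: shuffle one input across samples and count how often the recommendation flips. The `policy` function is a hand-written stand-in for a trained RL policy, and the feature names and value ranges are hypothetical.

```python
import random

FEATURES = ("tire_wear", "gap_ahead_s", "track_temp_c")

def policy(sample):
    """Stand-in for a trained policy: True means 'recommend a pit stop'."""
    worn_out = sample["tire_wear"] > 0.6
    undercut_chance = sample["tire_wear"] > 0.5 and sample["gap_ahead_s"] < 1.0
    return worn_out or undercut_chance

def permutation_importance(policy_fn, samples, feature, rng):
    """Share of decisions that change when one feature's values are shuffled."""
    baseline = [policy_fn(s) for s in samples]
    shuffled = [s[feature] for s in samples]
    rng.shuffle(shuffled)
    flips = 0
    for sample, value, decision in zip(samples, shuffled, baseline):
        perturbed = dict(sample, **{feature: value})  # copy with one feature replaced
        flips += policy_fn(perturbed) != decision
    return flips / len(samples)

rng = random.Random(0)
samples = [
    {
        "tire_wear": rng.random(),            # 0 = new, 1 = fully worn (assumed scale)
        "gap_ahead_s": rng.uniform(0.0, 5.0),
        "track_temp_c": rng.uniform(25.0, 50.0),
    }
    for _ in range(1000)
]
importance = {f: permutation_importance(policy, samples, f, rng) for f in FEATURES}
print(importance)
```

A strategist reading this output would see that tire wear drives most recommendations, the gap ahead matters occasionally, and track temperature is ignored by this particular stand-in policy. Each feature requires a full extra pass over the samples, which is a small-scale view of the added computational cost.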

Working Across Different Tracks and Conditions

F1 circuits are incredibly diverse, with each track presenting unique challenges - different surface materials, corner designs, elevation changes, and weather conditions all play a role in shaping race strategy. Models trained on one track often need significant retraining to perform well on another.

Unpredictable events, like sudden rain, safety car deployments, or other race incidents, make it even harder for RL systems to adapt. Real-time adjustments are crucial, but they require processing large amounts of data almost instantly. Issues like data latency or incomplete information can slow down RL models, making them less effective in fast-moving situations.

As a result, most teams use RL systems as a complement to human expertise. While RL provides valuable insights, strategists often override or tweak its recommendations, especially in scenarios where ambiguity or risk is high.
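One way to picture RL as a complement to human expertise is a simple gate that only passes a model's recommendation along when its inputs are fresh and complete. This is a hypothetical sketch: the `Telemetry` fields, the two-second staleness budget, and `toy_policy` are assumptions, not a description of any team's system.

```python
from dataclasses import dataclass
from typing import Callable, Optional

MAX_AGE_S = 2.0  # assumed staleness budget for telemetry, in seconds
DEFER = "defer to strategist"

@dataclass
class Telemetry:
    timestamp_s: float
    tire_wear: Optional[float]    # None models a failed sensor
    gap_ahead_s: Optional[float]

def usable(t: Telemetry, now_s: float, max_age_s: float = MAX_AGE_S) -> bool:
    """True only if the reading is recent and no required field is missing."""
    fresh = now_s - t.timestamp_s <= max_age_s
    complete = t.tire_wear is not None and t.gap_ahead_s is not None
    return fresh and complete

def advise(t: Telemetry, now_s: float, policy: Callable[[Telemetry], str]) -> str:
    """Return the policy's recommendation, or hand the decision to a human."""
    if not usable(t, now_s):
        return DEFER
    return policy(t)

def toy_policy(t: Telemetry) -> str:
    """Stand-in for a trained RL policy."""
    return "pit" if t.tire_wear > 0.6 else "stay out"

reading = Telemetry(timestamp_s=100.0, tire_wear=0.7, gap_ahead_s=1.2)
print(advise(reading, now_s=101.0, policy=toy_policy))  # fresh and complete
print(advise(reading, now_s=105.0, policy=toy_policy))  # stale
```

Even when the gate passes a recommendation through, the strategist remains free to override it, which matches how the article describes current practice.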

What's Next for Reinforcement Learning in F1

As Formula 1 teams continue to push the boundaries of technology, reinforcement learning (RL) is set to play an even bigger role in shaping race strategies. By building on current advancements and addressing existing challenges, RL is poised to redefine how races are strategized, fought, and ultimately won.

Combining RL with Other AI Systems

The future of F1 strategy is heading toward a fusion of RL with other cutting-edge AI technologies. Teams are increasingly integrating RL with tools like computer vision to monitor competitor positions and detect subtle track changes in real time. Add predictive analytics to the mix, and you get a system capable of factoring in elements like weather forecasts and tire degradation. Together, these technologies create dynamic, real-time strategies that adapt to countless variables, processing hundreds of data points in mere seconds to deliver actionable insights.

For example, recent studies have shown that combining RL with predictive analytics and computer vision can take pit stop timing and tire selection to a whole new level, outperforming traditional simulation methods. To make these systems more transparent and trustworthy for strategists, explainable AI techniques, such as feature importance analysis and decision tree-based explanations, are being implemented. This ensures that the recommendations are not only effective but also understandable.

New Areas for RL Development

Beyond integration, RL is opening doors to entirely new applications in F1 that could reshape race strategies even further. One promising area is the optimization of energy recovery systems (ERS). With the planned removal of the MGU-H and the overhaul of F1's energy systems set for 2026, RL could help teams determine the best moments to deploy ERS for overtaking or defending, all while adapting to simplified regulations.

Fuel load optimization is another exciting opportunity. Instead of relying solely on pre-race calculations, RL systems could adjust fuel consumption dynamically during the race. By factoring in real-time conditions, competitor performance, and track characteristics, these systems could help teams decide when to adopt aggressive or conservative strategies.

Driver training is also being transformed by RL-powered simulators. These adaptive simulators can create personalized scenarios - like sudden safety car deployments or tricky overtaking situations - helping drivers identify weaknesses and tailor their training to their specific needs.

Another game-changing application is mid-race crisis management. RL systems, capable of processing multiple scenarios at once, could help teams make quick, optimal decisions in response to unpredictable events like safety cars, red flags, or sudden weather changes. This ability to adapt on the fly could prove invaluable during high-stakes moments.

Teams Working with Researchers

Collaboration between F1 teams and researchers is becoming increasingly important for advancing RL applications. These partnerships combine the practical expertise of racing professionals with the latest developments in AI research, accelerating progress. Universities and AI labs bring cutting-edge techniques to the table, while teams provide the real-world insights needed to fine-tune these methods for competitive racing.

One key area of focus is developing explainable RL frameworks. Researchers contribute their expertise in interpretability, while teams ensure these methods are practical and easy for strategists to understand and trust. These collaborations are also addressing data quality issues by establishing standardized formats and secure sharing protocols for training models. As F1 strategies grow more complex, these joint efforts will be essential for staying ahead of the competition. By improving transparency and reliability, these partnerships are setting the stage for a new era of data-driven decision-making in Formula 1.

Conclusion

Reinforcement learning (RL) is changing how Formula 1 teams approach race strategy, shifting from experience-based decisions to dynamic, data-driven methods. By processing large volumes of telemetry data and adapting strategies in real time, RL has shown its value in simulated and retrospective race tests. Models like RSRL have shown measurable improvements over traditional approaches in simulation, making RL a promising tool in motorsport.

The advantages are clear: RL enables teams to adjust tire choices and pit stop timing based on live race conditions, whether it’s a sudden downpour or a safety car deployment. For example, in a simulated 2023 Bahrain Grand Prix, the RSRL model improved the average finishing position of its cars from P5.5 to P5.33, highlighting RL’s potential. Additionally, RL systems analyze hundreds of variables in seconds, offering strategy teams real-time recommendations during races. However, these advancements come with challenges.

Issues like inconsistent data quality and the opaque nature of RL decisions - despite advancements in explainability tools - remain hurdles for teams. Another concern is ensuring that strategies effective on one circuit can be adapted to others without requiring extensive retraining.

Looking ahead, RL's applications are expanding into areas like managing energy recovery systems, optimizing fuel loads, training drivers in adaptive simulators, and handling mid-race crises. Overcoming the challenges of data reliability, transparency, and adaptability will be crucial for further progress. Collaboration between F1 teams and researchers is driving these advancements, blending racing expertise with cutting-edge AI research. These partnerships are paving the way for better explainable RL systems and improved data-sharing practices.

Reinforcement learning has grown from a novel concept to a practical tool reshaping race strategy. While challenges persist, its proven benefits and expanding uses suggest RL will continue to play a pivotal role in Formula 1. Teams that prioritize robust data systems, transparent models, and research collaborations will position themselves for long-term success in this competitive landscape.

FAQs

How is reinforcement learning used differently from traditional machine learning in F1 race strategy optimization?

Reinforcement learning (RL) takes a different approach from traditional machine learning by excelling in dynamic, fast-changing environments - just like those in Formula 1 racing. While traditional methods learn from static datasets to predict outcomes, RL learns through trial and error, finding the best strategies by actively interacting with a virtual race environment. This makes it highly effective at responding to variables like shifting weather conditions, tire wear, or unforeseen incidents during a race.

In the context of F1, RL algorithms can analyze a vast array of strategy options, such as deciding the ideal timing for pit stops or selecting the best tires for specific conditions. By running countless simulated scenarios, RL enables teams to make smarter, data-backed decisions that weigh risk against reward, offering a distinct advantage in the high-stakes, high-speed world of Formula 1.

What challenges do F1 teams face when using reinforcement learning for race strategies?

F1 teams encounter numerous challenges when integrating reinforcement learning (RL) into race strategies. One of the biggest difficulties lies in managing the complexity of real-time decision-making during races. Factors like sudden weather changes, tire degradation, and unpredictable moves by competitors create a fast-moving environment where RL models need to process immense amounts of data and adapt on the fly. This level of responsiveness demands significant computational power.

Another obstacle is the accuracy of simulations used to train RL models. These simulations need to mirror actual race conditions as closely as possible. Even small inaccuracies can result in strategies that fall short on race day. On top of that, teams must navigate the constraints imposed by FIA regulations and the limited opportunities for testing during the season, which can restrict how much they can experiment with RL.

Even with these challenges, RL holds great potential for refining strategies and boosting performance on the track. It’s an area that continues to push the boundaries of what’s possible in Formula 1.

How can reinforcement learning work alongside other AI technologies to improve F1 race strategies in the future?

Reinforcement learning can work hand-in-hand with other AI technologies, like predictive analytics and machine learning, to develop smarter and more responsive strategies for F1 racing. For instance, predictive models can evaluate factors such as weather conditions, tire wear, and fuel usage, while reinforcement learning algorithms adjust decisions in real time based on these insights.

By incorporating AI-powered simulations, teams can experiment with numerous scenarios and fine-tune their strategies long before race day. This combination of reinforcement learning and other AI tools has the potential to greatly improve race outcomes, offering teams a valuable advantage in the fast-paced and competitive world of Formula 1.